Ethics label developed for AI systems

The working paper “AI Ethics: From Principles to Practice – An interdisciplinary framework to operationalise AI ethics” shows how general AI ethics principles can be put into practice across Europe. The authors are members of the “AI Ethics Impact Group” established by the Bertelsmann Stiftung and VDE. Three ITAS researchers are involved: Rafaela Hillerbrand, Torsten Fleischer, and Paul Grünke.

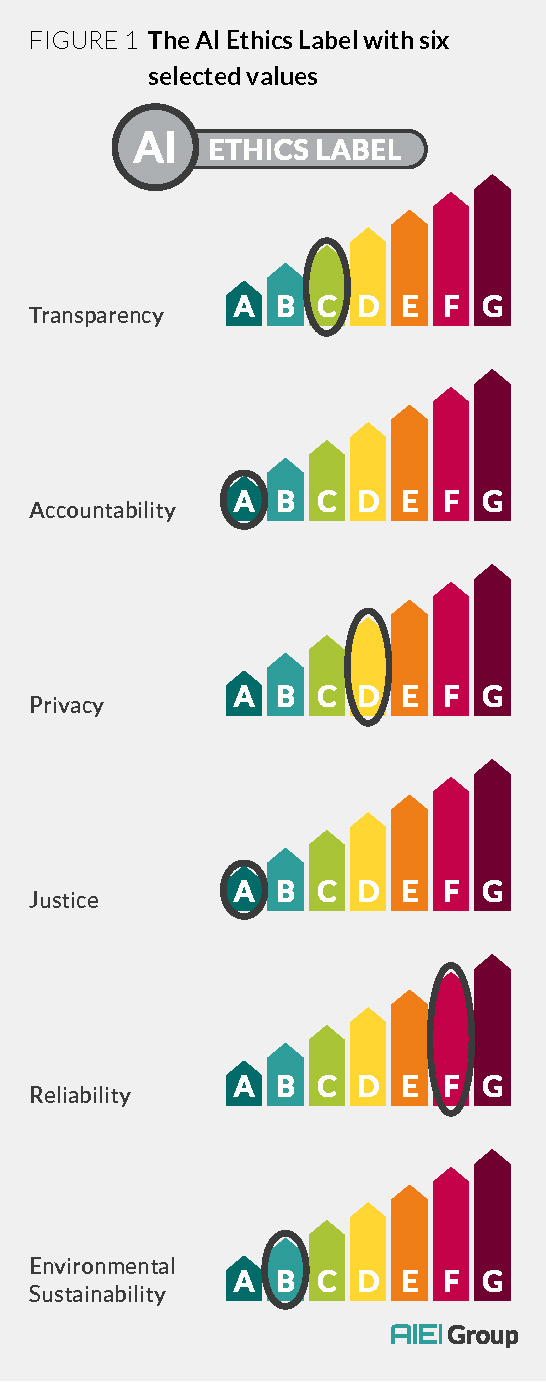

The paper focuses on the design of an ethics label for AI systems, similar in appearance to the energy efficiency labels used for refrigerators and other electronic devices. With the label, producers of AI systems can classify the ethical quality of their products. This makes the products comparable on the market and makes the ethical requirements they meet transparent. The label is based on the values of transparency, accountability, privacy, fairness, reliability, and environmental sustainability.

The requirements a system must meet to reach each level of the label can be determined with the help of the so-called “VCIO model” (German: “WKIO-Modell”), which combines values, criteria, indicators, and observables.
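The hierarchy of values, criteria, indicators, and observables can be pictured as a nested data structure. The following is only a minimal sketch under stated assumptions: the class names, the example criterion and indicator, the letter scale A to G (borrowed from energy labels), and the worst-case aggregation rule are all illustrative and are not taken from the working paper.

```python
from dataclasses import dataclass, field


@dataclass
class Indicator:
    """A question about the system, answered by selecting one observable."""
    name: str
    observables: list[str]  # ordered from best (index 0) to worst
    observed: int = 0       # index of the observable actually found


@dataclass
class Criterion:
    """A condition that makes a value concrete; checked via indicators."""
    name: str
    indicators: list[Indicator] = field(default_factory=list)


@dataclass
class Value:
    """A top-level value such as transparency or privacy."""
    name: str
    criteria: list[Criterion] = field(default_factory=list)

    def level(self) -> str:
        # Hypothetical rule: the worst observed index across all
        # indicators determines the letter shown on the label.
        worst = max(
            (ind.observed for crit in self.criteria for ind in crit.indicators),
            default=0,
        )
        return "ABCDEFG"[min(worst, 6)]


# Illustrative content only; not quoted from the working paper.
transparency = Value(
    name="transparency",
    criteria=[
        Criterion(
            name="documentation of training data",
            indicators=[
                Indicator(
                    name="Is the origin of the training data documented?",
                    observables=["fully", "partially", "not at all"],
                    observed=1,  # "partially"
                )
            ],
        )
    ],
)

print(transparency.name, transparency.level())
```

In this sketch each value is rated separately, so a label would show one letter per value rather than a single overall score. How the paper itself aggregates observables into levels should be taken from the full text.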

Risk matrix for different applications

The researchers point out in their publication that the context in which an algorithmic system is used matters. For example, a system used in agriculture requires a lower degree of transparency than one used to process personal data. To assess whether the use of AI is ethically acceptable for a specific purpose, the researchers have developed a so-called risk matrix.

The authors see the proposed solutions as tools that help political actors and regulatory bodies specify requirements for AI systems and monitor compliance with them effectively. Companies and organizations that want to use algorithmic systems could also use the model. According to the authors, the tools must now be tested in practice and then developed further. (09.04.2020)

Further links and information:

- Working Paper AI Ethics: From Principles to Practice in full text (PDF)

- Further information and video on the working paper